Scalable IT infrastructure is one of the most important benefits cloud services offer small and medium-sized businesses. With the cloud, your business can scale its resources up or down on demand while keeping unnecessary costs under control.

Scalable data infrastructure is particularly beneficial for businesses dealing with seasonal or cyclical demand. Retail companies, for example, can easily manage server demand during the holidays.

Below, Dev.Pro’s experts weigh in on best practices for scalability in cloud computing and how these approaches benefit consumers.

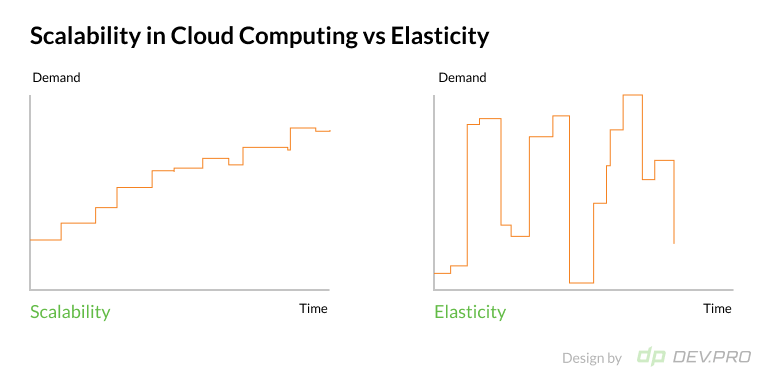

What is Cloud Scalability and Elasticity?

First, some housekeeping to get all readers on the same page: let’s see how these two terms differ.

What is Scalability in Cloud Computing?

Scalability is the inherent capacity of a system to cater to growing demand by adding more or bigger computing resources.

By planning for scalability when you design your software architecture, you can choose cloud services that can handle a growing number of users. This lets you build up your computing power gradually, adding more machines or switching to bigger ones as demand grows.

The term “scalability” was coined to describe the ideal state of an architecture that supports the growth of a successful startup. In the pre-cloud, pre-SaaS era, planning for scale was expensive, so teams often started building on rigid systems that were hard to scale.

Now that we’ve covered the meaning of scalability in cloud computing, let’s find out why it’s different from elasticity.

What is Elasticity in Cloud Computing?

Elasticity is the inherent capacity of a system to cater to a constantly changing demand level with significant unpredictable dips and peaks.

Elasticity refers to a system’s ability to change its computing capacity drastically to match an ever-fluctuating workload. Such systems are configured so that clients are charged only for the instances they consume, even during sudden bursts in demand. In an elastic system, you pay for what you consume.

Today, the term “scalability” is often used interchangeably with “elasticity,” as it is in the rest of this article.

Types of Scalability in Cloud Computing

There are two main types of scaling in cloud computing: vertical and horizontal. There is also a hybrid version called diagonal scaling.

Vertical Scaling [Bigger Machines]

Vertical scaling is the process of accommodating growing capacity demand by upgrading existing resources [for example, increasing disk capacity or adding random access memory (RAM) and central processing unit (CPU) capacity].

Vertical scaling is mostly used by small and medium-sized businesses and microbusinesses, or at the initial stage of an application roll-out.

- Pros: it’s more consistent, as there is no load balancer, and it’s faster.

- Cons: its scaling capacity is limited, and it presents a single point of failure, as all processing happens on one machine.

Horizontal Scaling [More Machines]

Horizontal scaling is the process of accommodating growing capacity demand by adding more machines rather than upgrading existing ones.

Horizontal scaling is typically used by enterprise-level companies and for complex applications.

- Pros: it’s resilient to failures and offers virtually unlimited scaling possibilities.

- Cons: it’s slower and less consistent, as computing and storage happen on different machines.

Diagonal Scaling [Bigger Machines + More of Them]

Diagonal scaling is a hybrid approach: you first increase the compute capacity of each machine to its practical maximum, then add more machines. This offers the benefits of both approaches while minimizing their risks.
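To make the difference between the three strategies concrete, here is a minimal Python sketch of a diagonal scaling decision. The instance sizes are hypothetical "capacity units" rather than real instance types, and the rule (scale up to the largest size, then scale out) is a simplification of how a team might plan capacity.

```python
import math

# Hypothetical instance sizes in abstract capacity units (not real instance types).
INSTANCE_SIZES = [2, 4, 8, 16]


def plan_diagonal(required_units: int, sizes: list[int] = INSTANCE_SIZES) -> tuple[int, int]:
    """Return (instance_size, instance_count) under a diagonal scaling strategy.

    Scale up first: pick the smallest size that covers demand on one machine.
    Once demand exceeds the largest size, scale out: keep the largest size
    and add as many machines as needed.
    """
    if required_units <= 0:
        raise ValueError("required_units must be positive")
    for size in sorted(sizes):
        if size >= required_units:
            return size, 1  # vertical: one bigger machine is enough
    largest = max(sizes)
    # horizontal: more copies of the largest machine
    return largest, math.ceil(required_units / largest)
```

For example, `plan_diagonal(3)` returns `(4, 1)`, a single bigger machine, while `plan_diagonal(40)` returns `(16, 3)`, three of the largest machines.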

Cloud Environments in Cloud Computing

Scalability in cloud computing is still a highly variable concept; its price, applications, and benefits depend on multiple factors. One of these is the type of environment used for your software system or platform. There are four types of cloud environments: public, private, hybrid, and community clouds.

Public Cloud Environment

Public clouds are environments hosted by a cloud service provider that rents capacity to multiple tenants sharing the same infrastructure. Their security features are not as strong as those of private clouds, but they are cheaper because the costs are shared. When one business experiences a peak, another is likely consuming less bandwidth, so the same servers can serve multiple businesses, making them more affordable.

These environments are usually used to perform computing and storage of non-critical functions, like email, CRM, HR, and web.

Private Cloud Environment

Private cloud services are used by a single client, so whether or not that client uses the full capacity, it pays for all of it. A private cloud is more expensive but more secure than a public environment. Clients can also configure it to best suit their specific business needs.

This environment is used for mission-critical applications that carry sensitive data, like business analytics, research and development, and supply chain management.

Hybrid Cloud Environment

Hybrid solutions offer users the best of both worlds and are increasingly common in tech companies. More sensitive functions are run in a private environment while public environments are used to cater to peak demand.

Community Clouds

This solution falls somewhere between a public and a private cloud. Community clouds are not fully public; they are accessible only to organizations from certain industries or segments, such as healthcare or public services.

This solution offers greater security than a public cloud at lower cost than a fully private environment. Members of such communities generally compete less directly with one another, which makes it easier for them to unite under one umbrella.

Generally speaking, most migrations from on-prem to cloud or hybrid deployments use some public cloud capacity for cost optimization. On-prem bare metal machines can then serve as a protected vault for secret and sensitive data. For cloud-native applications, hybrid cloud use is standard.

Let’s consider the benefits of designing your software systems and networks for user growth.

Benefits of Scalability in Cloud Computing

Scalable cloud computing has multiple advantages for companies of all sizes and stages of development, but it is particularly useful for scaleups and enterprises.

Cost-efficiency

Many businesses prefer to keep capital expenditure (CapEx) low and shift spending to operating expenditure (OpEx). This is a natural part of moving from bare metal to cloud computing, and it can lower overall costs. Public cloud environments are pay-as-you-go services, so you don’t pay for idle machines.

Strong Traction

Companies whose systems are built to scale can gain strong traction right away if their product resonates with users, without losing demand to system downtime.

Resilience

When scaling horizontally or diagonally, you get highly resilient environments, because a failed or congested machine can quickly be replaced by a healthy one.

Faster Time-to-market and Operational Agility

Vertical and diagonal scaling in particular enable a highly agile processing environment in which computing is performed quickly, in near-real time. When moving from on-prem deployment to any of the cloud environments, companies also enjoy faster time to market.

Security

Providers usually bake high security standards into their cloud infrastructure, and encryption, monitoring, and a multilayered approach to authorization add further protection. Cloud vendors know how much a security breach would cost their reputation and bottom line, so they invest heavily in security features.

Global Access

With cloud computing, team members working remotely or in the field can access data on the fly from their mobile devices, at the same time as their peers in the office.

When Should You Use Cloud Scalability?

Now that we know just how impactful scaling in cloud computing is, let’s see if your business should integrate cloud computing into its IT structure. Consider upgrading your software architecture to include scalable functionality if:

- You are a startup with a sizable and addressable market, as well as an investment to support you through initial product development.

- Your business has dips and spikes in demand that are rather chaotic, like FinTech trading, retail sales, and weather-dependent taxi service apps.

- Your company has a steady plan of business development that suggests your users will grow in a planned manner following expansion into other markets.

- Any of the above applies to your clients who use your software as a third-party tool.

These are just a few of the major cases in which a business needs to make sure its systems can withstand higher demand, planned or unplanned, without a drop in quality or an increase in service outages.

How to Achieve Cloud Scalability

Achieving scaling in cloud computing is not easy, but the returns are worth the investment. The process is multi-tiered, long, and technical, but here are just a few of the principal steps:

- A well-designed software architecture, a careful choice of tools, and thorough vendor selection are fundamental to a highly scalable system.

- Legacy systems and platforms that are in the process of migrating to hybrid or fully cloud environments require thorough planning to minimize downtime and ensure seamless transition. Consider hiring a cloud migration consultant for a second opinion.

- Adopting CI/CD and other DevOps practices can help automate the software development life cycle (SDLC) and make it more scalability-friendly.

- Use load balancers to distribute the workload across multiple nodes for increased resilience and efficiency.

- Follow the well-trodden path: shift gradually from vertical to horizontal scaling, and end up with diagonal scaling if users flood your services.
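The load balancer step can be illustrated with a minimal round-robin balancer in Python. This is a sketch under simplifying assumptions: the `Node` and `RoundRobinBalancer` names are hypothetical, health is a simple flag rather than a real health check, and production load balancers do far more.

```python
from dataclasses import dataclass
from itertools import cycle


@dataclass
class Node:
    name: str
    healthy: bool = True  # stand-in for the result of a real health check


class RoundRobinBalancer:
    """Hand requests to nodes in turn, skipping nodes marked unhealthy."""

    def __init__(self, nodes: list[Node]):
        if not nodes:
            raise ValueError("at least one node is required")
        self.nodes = nodes
        self._ring = cycle(nodes)

    def pick(self) -> Node:
        # Look at each node at most once per call so we never loop forever.
        for _ in range(len(self.nodes)):
            node = next(self._ring)
            if node.healthy:
                return node
        raise RuntimeError("no healthy nodes available")
```

Because the balancer skips nodes marked unhealthy, traffic keeps flowing when one machine fails, which is the resilience benefit of horizontal scaling described earlier.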

Even though the above are good milestone recommendations, scalability is a complex network of actions and best practices. Let’s dive into some technical details for illustration.

How Is Cloud Scalability Helpful to You as a Consumer?

A scalable cloud computing infrastructure allows companies to quickly adjust their use of on-demand servers, depending on the number of users and transactions they need to accommodate.

However, not all companies use cloud services to their full potential.

For instance, you can use a pay-as-you-go model to minimize the cost of peak loads. When your product’s load changes, peaking during promo campaigns or leaving capacity idle at night, your cloud pricing model can adapt accordingly. At the same time, steady, even traffic can be served by more affordable fixed pricing models that suit it better.
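The trade-off between fixed and pay-as-you-go pricing is simple arithmetic. The Python sketch below covers a baseline with fixed (reserved) capacity and the peaks with on-demand capacity. Both hourly rates are hypothetical placeholders; real prices vary by provider, region, and instance type.

```python
# Hypothetical prices per instance-hour; substitute your provider's real rates.
ON_DEMAND_RATE = 0.10  # pay-as-you-go
RESERVED_RATE = 0.06   # discounted fixed commitment


def total_cost(hourly_demand: list[int], reserved: int) -> float:
    """Cost of serving each hour with `reserved` fixed instances plus on-demand overflow.

    Fixed instances are paid for every hour, whether or not they are busy.
    """
    cost = 0.0
    for demand in hourly_demand:
        overflow = max(0, demand - reserved)
        cost += reserved * RESERVED_RATE + overflow * ON_DEMAND_RATE
    return cost


def cheapest_reservation(hourly_demand: list[int]) -> int:
    """Try every fixed capacity from 0 to the peak and return the cheapest."""
    return min(range(max(hourly_demand) + 1),
               key=lambda r: total_cost(hourly_demand, r))
```

With a day of 20 hours at 4 instances and 4 peak hours at 10 (`[4] * 20 + [10] * 4`), pure pay-as-you-go costs about 12.00, reserving the full peak about 14.40, and reserving only the 4-instance baseline about 8.16, which is what `cheapest_reservation` picks.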

The sections below highlight different situations and options to serve them in more affordable and sustainable ways.

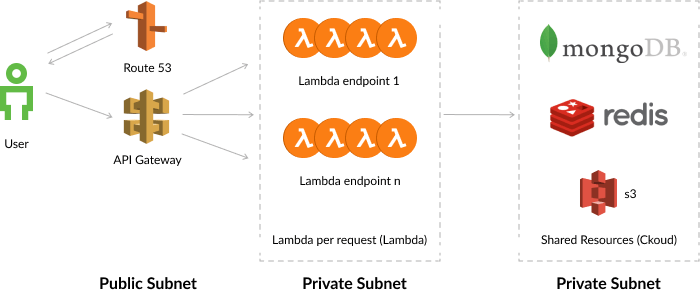

Best Practice #1: Cloud Computing Scalability for REST API

Amazon API Gateway lets you build representational state transfer application programming interfaces (REST APIs) with various scalability options and without the need to manage servers. API Gateway handles traffic management and scales with your traffic. But for the backend behind it, is it better to use AWS Lambda or Amazon Elastic Container Service (ECS)? Let’s dive deeper.
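Part of API Gateway’s traffic management is throttling, which AWS documents as a token bucket with a steady-state rate and a burst limit. The Python sketch below is a simplified, single-process version of that algorithm, not API Gateway’s implementation; the timestamp is passed in explicitly to keep it deterministic.

```python
class TokenBucket:
    """Token bucket rate limiter: `rate` tokens per second refill, up to `burst` tokens."""

    def __init__(self, rate: float, burst: int):
        self.rate = rate
        self.burst = burst
        self.tokens = float(burst)  # start full so an initial burst is allowed
        self.last = 0.0             # time (seconds) of the last refill

    def allow(self, now: float) -> bool:
        """Return True if a request arriving at time `now` may proceed."""
        elapsed = max(0.0, now - self.last)
        self.tokens = min(self.burst, self.tokens + elapsed * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False  # a throttled API Gateway request gets HTTP 429 instead
```

With `rate=1` and `burst=3`, three requests at `t=0` pass and a fourth is rejected; one second later, a single token has been refilled, so exactly one more request is allowed.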

Cloud Computing Scalability with AWS Lambda

In a nutshell, AWS Lambda is a serverless cloud computing platform provided by Amazon Web Services and is an excellent solution to address scalability challenges for small, stateless API services.

When to use:

- Short-running tasks (< 29 sec, the default API Gateway integration timeout)

- Small payloads (< 10 MB)

- Limited parallel requests (< 1000)

AWS Lambda cloud computing scalability benefits:

- Minimizes costs with a pay-as-you-go model: this can save you up to 90% on cloud expenses.

- Automates scalability: serverless solutions can adjust to sudden jumps in usage.

- Accelerates iterative development: no need for continuous delivery tools; just deploy the code from the developer console.

- Optimizes team workflow: automated scaling frees your developers to work on new features and reduces administrative costs.

This solution is best used at the initial, low-traffic stage of the project, when fast setup is required.

Cloud Computing Scalability with AWS ECS

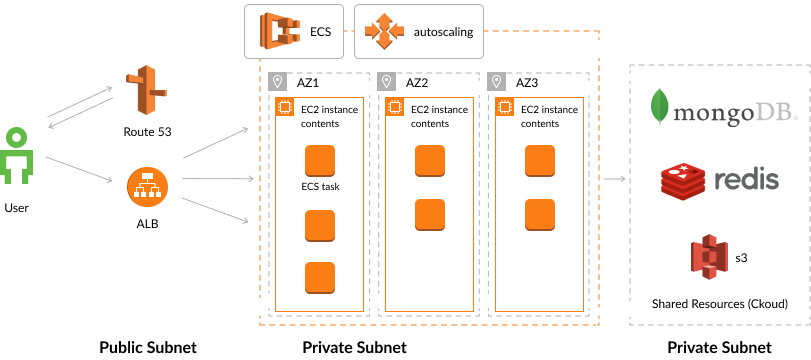

Amazon ECS is a fully managed container orchestration service for running production workloads on AWS. In addition to managing task placement, integration with the AWS platform, and VPC networking, ECS provides users with auto-scaling features.

When to use:

- Big payloads

- Long-running tasks

- Managed scaling

- Pay per EC2 usage

- More complex app setup
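The two "When to use" lists for Lambda and ECS can be condensed into a rough decision helper. This is a hypothetical Python sketch: the thresholds are the rules of thumb from the Lambda list above, not hard limits for every service configuration, and `Workload` and `choose_backend` are illustrative names.

```python
from dataclasses import dataclass

# Rules of thumb from the Lambda "When to use" list; adjust to your own setup.
LAMBDA_MAX_SECONDS = 29        # default API Gateway integration timeout
LAMBDA_MAX_PAYLOAD_MB = 10
LAMBDA_MAX_CONCURRENCY = 1000  # default Lambda concurrency quota


@dataclass
class Workload:
    duration_seconds: float
    payload_mb: float
    peak_parallel_requests: int


def choose_backend(w: Workload) -> str:
    """Return "lambda" if the workload fits every Lambda rule of thumb, else "ecs"."""
    fits_lambda = (
        w.duration_seconds < LAMBDA_MAX_SECONDS
        and w.payload_mb < LAMBDA_MAX_PAYLOAD_MB
        and w.peak_parallel_requests < LAMBDA_MAX_CONCURRENCY
    )
    return "lambda" if fits_lambda else "ecs"
```

A short request with a small payload at modest concurrency lands on Lambda; breaking any one threshold (a long-running job, a big payload, or heavy parallel traffic) points to ECS.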

Auto-scaling is driven by CloudWatch metrics available for ECS services, such as CPU and memory utilization. With AWS auto-scaling, you can automatically increase or reduce the number of tasks running in your ECS service.

With CloudWatch metrics, you can handle an enormous volume of requests by adding tasks as needed and removing them when the volume decreases.
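A target tracking policy for an ECS service adjusts the task count to keep a metric, such as average CPU utilization, near a target value. The Python function below is a simplified proportional estimate of that behavior, not AWS’s exact algorithm; the function name, parameters, and default minimum and maximum task counts are hypothetical.

```python
import math


def desired_task_count(current_tasks: int, avg_cpu_percent: float,
                       target_cpu_percent: float,
                       min_tasks: int = 1, max_tasks: int = 100) -> int:
    """Estimate how many tasks would bring average CPU back to the target.

    Assumes the total work stays the same and spreads evenly across tasks.
    """
    if current_tasks < 1 or target_cpu_percent <= 0:
        raise ValueError("need at least one running task and a positive target")
    needed = math.ceil(current_tasks * avg_cpu_percent / target_cpu_percent)
    # Keep the result inside the service's configured bounds.
    return max(min_tasks, min(max_tasks, needed))
```

For example, 4 tasks averaging 90% CPU against a 60% target suggests scaling out to 6 tasks, while 10 tasks at 15% suggests scaling in to 3.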

ECS therefore offers cloud scalability if you expect your project to deal with significant traffic and numerous requests. This solution is well suited for virtually unlimited scale and cost efficiency.

Best Practice #2: Cloud Computing Scalability for Background Tasks

What are background tasks? Consider the checkout process of a typical online store. When a user completes a transaction, the data has to be stored following a traditional design pattern that guarantees data integrity. After the data is processed, the user receives a message saying either that the transaction succeeded or that there was an error.

Because several steps of the save logic run on non-cloud platforms, the process takes time: there is a delay while the cloud syncs with the back end and another while the user syncs with the cloud. To avoid these delays, your company may want to run these activities in the background and notify customers as soon as their requests have been received.
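This "accept now, process later" pattern can be sketched with Python’s standard library: the request handler only enqueues the order and replies immediately, while a worker thread performs the slow save. In production, the in-memory queue would be replaced by a managed queue such as SQS; the names `checkout`, `worker`, and `saved_orders` are hypothetical.

```python
import queue
import threading
import uuid

jobs: queue.Queue = queue.Queue()
saved_orders: list[dict] = []  # stand-in for the slow back-end store


def checkout(order: dict) -> dict:
    """Accept the order, enqueue it, and reply without waiting for the save."""
    order_id = str(uuid.uuid4())
    jobs.put({"id": order_id, **order})
    return {"status": "accepted", "order_id": order_id}


def worker() -> None:
    """Background worker that runs the slow save logic off the request path."""
    while True:
        job = jobs.get()
        if job is None:  # sentinel that tells the worker to stop
            jobs.task_done()
            break
        saved_orders.append(job)  # the multi-step save would happen here
        jobs.task_done()
```

To try it, start `worker` in a `threading.Thread`, call `checkout({"item": "book"})`, and call `jobs.join()` to wait until the background save has finished. The customer gets the `accepted` reply before that save completes.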

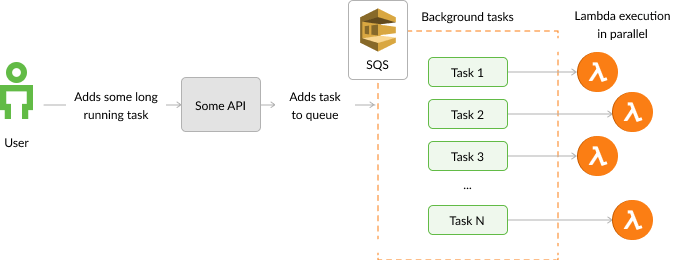

To handle this task, you can use either AWS Simple Queue Service (SQS) with Lambda or SQS with ECS.

AWS SQS and Lambda

AWS SQS lets web apps send messages programmatically and lets you decouple your pipeline into separate microservices to optimize it.

SQS can be used as an event source for Lambda. The process works as follows: a message is added to a queue, then a Lambda function is invoked with an event that contains this message (SQS delivers messages to Lambda in batches).
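A minimal Python handler for this setup might look like the sketch below. The `Records`, `messageId`, and `body` fields follow the SQS event format Lambda delivers, and the `batchItemFailures` response only takes effect if partial batch responses (`ReportBatchItemFailures`) are enabled on the event source mapping. `process_order` is a hypothetical placeholder for your business logic.

```python
import json


def process_order(order: dict) -> None:
    """Hypothetical business logic; raises if the order cannot be processed."""
    if "item" not in order:
        raise ValueError("order has no item")


def handler(event, context):
    """Lambda entry point for an SQS event source mapping.

    Reports the IDs of failed messages so that, with ReportBatchItemFailures
    enabled, only those messages return to the queue for a retry.
    """
    failures = []
    for record in event["Records"]:
        try:
            process_order(json.loads(record["body"]))
        except Exception:
            failures.append({"itemIdentifier": record["messageId"]})
    return {"batchItemFailures": failures}
```

If one message in a batch fails, only that message goes back to the queue instead of the whole batch being reprocessed.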

When to use:

- Short-running tasks (< 15 min, the maximum Lambda timeout)

- Limited parallel requests (< 1000)

- Configured execution concurrency

SQS and Lambda are commonly used together because SQS improves how Lambda handles invocations and errors. By default, Lambda concurrency is capped at 1,000 simultaneous executions per account per Region. Once a function reaches this limit, further synchronous calls fail with a throttling error. With SQS in between, messages that could not be processed stay in the queue and are retried automatically based on your configuration. As a result, you can avoid failures caused by sudden spikes in usage.
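The buffering effect can be shown with a deliberately simplified Python model: a burst of requests either hits the function directly, where anything above the concurrency limit is throttled, or lands in a queue that is drained in rounds of at most `concurrency_limit` messages. Real SQS polling and Lambda scaling ramp up more gradually; the function names here are hypothetical.

```python
def direct_invocations(burst: int, concurrency_limit: int) -> tuple[int, int]:
    """Synchronous calls: anything above the limit is throttled back to the caller.

    Returns (handled, throttled).
    """
    handled = min(burst, concurrency_limit)
    return handled, burst - handled


def queued_invocations(burst: int, concurrency_limit: int) -> tuple[int, int]:
    """Queued messages wait their turn, so none are throttled away.

    Returns (handled, rounds_needed); each round processes up to the limit.
    """
    if concurrency_limit <= 0:
        raise ValueError("concurrency_limit must be positive")
    backlog, handled, rounds = burst, 0, 0
    while backlog > 0:
        batch = min(backlog, concurrency_limit)
        handled += batch
        backlog -= batch
        rounds += 1
    return handled, rounds
```

For a burst of 2,500 requests against a limit of 1,000, direct calls handle 1,000 and throttle 1,500, while the queued version handles all 2,500 over three rounds. The queue trades a little latency for not losing work.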

For low-traffic projects, use SQS and Lambda together for small tasks in order to reduce costs and ensure a fast setup.

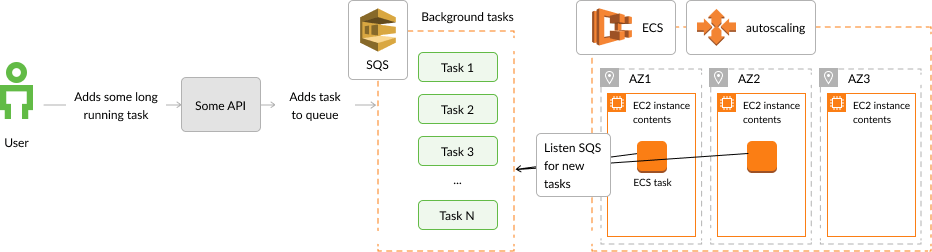

AWS SQS and ECS

If a service that processes a queue has to cope with peak loads, you may need to scale ECS: running more tasks handles more transactions at once, so the queue drains faster. For this purpose, you can use Amazon Simple Queue Service to scale your pipeline.

When to use:

- Long-running tasks

- Managed scaling

- Complex application setup

To scale your system following this best practice, you again use CloudWatch metric alarms. They continuously monitor the number of items in the queue and trigger a scaling action when it exceeds the threshold you specified.
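A common way to turn queue depth into a task count is to decide how many messages each task may have waiting (its acceptable backlog) and divide the queue length, for example the SQS `ApproximateNumberOfMessagesVisible` metric, by that number. The Python sketch below follows that idea; the function name, timings, and task limits are hypothetical, and in practice the result would feed a scaling policy rather than be applied by hand.

```python
import math


def tasks_for_backlog(visible_messages: int, seconds_per_message: float,
                      acceptable_latency_seconds: float,
                      min_tasks: int = 0, max_tasks: int = 50) -> int:
    """Number of ECS tasks needed to drain the queue within the acceptable latency.

    One task clears acceptable_latency / seconds_per_message messages in time,
    so that ratio is the backlog each task may carry.
    """
    if seconds_per_message <= 0 or acceptable_latency_seconds <= 0:
        raise ValueError("timings must be positive")
    backlog_per_task = acceptable_latency_seconds / seconds_per_message
    needed = math.ceil(visible_messages / backlog_per_task)
    return max(min_tasks, min(max_tasks, needed))
```

With 1,000 visible messages, 0.5 seconds per message, and a 100-second latency target, each task may carry 200 messages, so 5 tasks are needed; a single extra message pushes the count to 6.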

The SQS and ECS combination is a great match for large projects thanks to its cost efficiency for high-traffic solutions.

Best Practice #3: Horizontal Cloud Computing Scalability with MongoDB

Another way to ensure scalability is to balance database load by distributing simultaneous client requests across several database servers, which reduces the burden on any single server. By default, MongoDB can handle multiple client requests at the same time. In addition, MongoDB uses concurrency control mechanisms and locking protocols to maintain data integrity at all times.

Horizontal scaling in MongoDB splits your data into separate pieces and stores them on multiple servers. You can achieve it by using sharding and replica sets.

MongoDB sharding distributes data and load across multiple servers called shards. Each shard holds a subset of the data, while the collection as a whole behaves as one logical database.

When to use:

- Big and complex systems

- Vertical scaling isn’t cost-efficient enough

With horizontal scale-out, you can add new servers at runtime. This avoids downtime during scaling and spreads the load across more machines, which improves database performance.

Even though horizontal scaling can be cheaper than vertical scaling, there are a few things to consider. You need to ensure an efficient distribution of keys between shards, and the approach requires more complex configuration, both to set up and to operate.
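Key distribution matters because a poorly chosen shard key can send most writes to one shard. The Python sketch below compares range-based and hash-based placement for a monotonically increasing key, such as an auto-incrementing order ID. It is a simplified model, not MongoDB’s balancer or its hashing scheme: the shard boundaries are fixed and hypothetical, and the hash is SHA-256 taken modulo the shard count.

```python
import hashlib

NUM_SHARDS = 4


def range_shard(key: int, boundaries: list[int]) -> int:
    """Range placement: shard i holds keys below boundaries[i]; the last shard takes the rest."""
    for shard, upper in enumerate(boundaries):
        if key < upper:
            return shard
    return len(boundaries)


def hashed_shard(key: int, num_shards: int = NUM_SHARDS) -> int:
    """Hashed placement (simplified): hash the key, then map the hash onto a shard."""
    digest = hashlib.sha256(str(key).encode()).digest()
    return int.from_bytes(digest[:8], "big") % num_shards
```

With boundaries `[1000, 2000, 3000]`, all 1,000 new orders with IDs 3000 to 3999 land on the last shard under range placement, creating a write hotspot, while hashed placement spreads them roughly evenly across all four shards. The trade-off is that hashed placement makes range queries on the key touch every shard.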

Importance of Cloud Scalability for Your Business

Businesses at different maturity levels can aim for different benefits when planning their scaling journey:

- Get ahead of the competition by being there for your users when others have outages

- Ensure customer satisfaction, gain credibility, and loyalty

- Provide secure and reliable service at peak times, and save in the low season

- Achieve FinOps gains from system elasticity

- Take advantage of top-notch security offered by global leaders in cloud services like AWS, GCP, and Azure

Regardless of which of these factors makes scalability important for your business, one thing is certain: if your company has growth plans, your software platform should be ready for them.

What Approach Should You Adopt for Scaling Your Cloud Services?

There is no universal answer to this question, as it depends on your initial tasks, product size, load expectations, and approved budget.

If you’re not sure which solution to choose or have any other concerns, drop us a line. Our Cloud and DevOps experts have proven expertise in projects like these and are here to guide you.