As microservice adoption grows, DevOps architects lose sleep over how to scale microservices [MS] without a matching jump in cloud costs.

This type of software architecture is growing rapidly in popularity. The proportion of apps developed using microservices increased from 40% in 2019 to 60% in 2020, according to the latest NGINX survey.

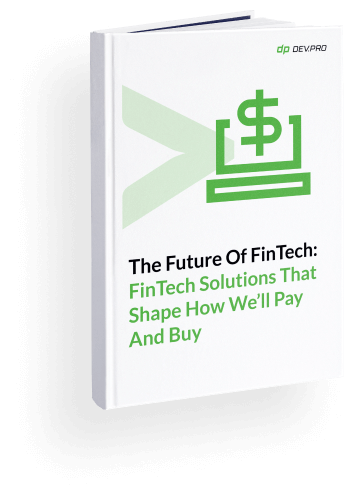

The study also reveals challenges in the adoption process, where over a quarter of respondents are concerned about scalability, while security, availability, system failure and performance top this chart.

Software system integration is one of Dev.Pro’s main capabilities, so we decided to chip in and share some tips and tricks with our audience. Let’s cover the basics first to ensure all of our readers are on the same page.

What Is Microservices Architecture?

Before defining microservices, let’s take a short historical detour to give you some perspective.

Systems architecture was originally monolithic, meaning all services [e.g. payment, loyalty, inventory, shipping] were part of one tightly coupled system, using the same database and servers. With the proliferation of cloud and app development, SOA and microservices emerged; both of them presented a more independent approach.

Microservices architecture is a way to structure a software product, whereby autonomous services with specific business intent are loosely coupled and connected by lightweight protocols. This type of structuring ensures higher resilience, speed, and scalability.

Originally, microservices derived from SOA and are often referred to as “SOA done right” or “finely-grained SOA.” Both of these software architecture types allow for the use of different technologies and teams for loosely-coupled services, enabling rapid growth.

How to Scale Microservices Architecture: Types and Approaches

With the ABC of the concept out of the way, it’s time to reveal the intricacies of how to scale microservices architecture.

There are two main ways to expand system capacity in microservices architecture: horizontal and vertical scaling. Both methods have pluses and minuses; let’s consider the major ones.

Vertical Scaling

Vertical scaling is a method of increasing computing power by adding more resources to an existing system. Additional memory, CPU [Central Processing Unit], or disk IOPS [Input/Output Operations Per Second] can be added to extend the capabilities of a given server or VM [Virtual Machine].

On the positive side, this technique allows for faster and more consistent performance, as everything happens on one machine. However, this approach is limited in its scaling potential and presents a single point of failure.

Microservices Horizontal Scaling

Horizontal scaling involves adding more nodes to the existing set of hardware resources [like servers or virtual machines] without augmenting their initial capacity.

The advantage of this method is that loosely connected systems are more resilient to system failures and scaling can go on continually simply by adding more machines. However, network calls between the disparate machines may take more time and data consistency is weaker due to the multi-node setup.

When growth is slow, gradual, and predictable, the preferred approach is to scale vertically first and only then multiply the upgraded nodes. This is called hybrid scaling.
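The scale-up-first, then scale-out idea can be sketched as simple capacity arithmetic. This is only an illustration: the function name, the parameters, and the assumption that throughput grows linearly with node size are all hypothetical simplifications, not something the article or any cloud provider prescribes.

```python
import math


def plan_hybrid_scaling(required_rps, node_rps, max_vertical_factor):
    """Return (vertical_factor, node_count) for a scale-up-first policy.

    Assumes (hypothetically) that a node upgraded by factor f serves
    f * node_rps requests per second.
    """
    if required_rps <= 0 or node_rps <= 0 or max_vertical_factor < 1:
        raise ValueError("inputs must be positive and the factor at least 1")

    # Step 1: grow a single node as far as needed, up to the hardware ceiling.
    vertical_factor = min(max_vertical_factor, max(1.0, required_rps / node_rps))

    # Step 2: clone the upgraded node until the remaining demand is covered.
    node_count = math.ceil(required_rps / (node_rps * vertical_factor))
    return vertical_factor, node_count
```

Under these assumptions, demand below the vertical ceiling is absorbed by one larger node, and only demand beyond it triggers additional nodes.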

The Scale Cube

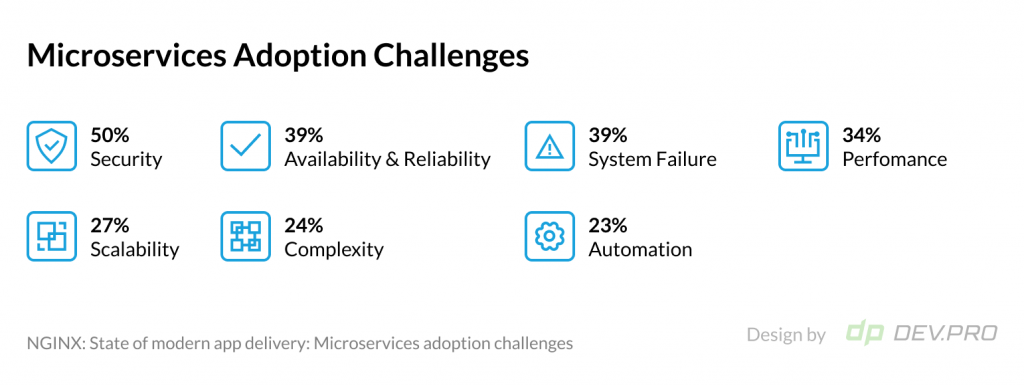

A rather singular approach to scaling is described in “The Art of Scalability,” where the authors lay out a framework for a system that can expand in the shape of a cube with three axes: X, Y, and Z.

While not all of the types described in the Scale Cube classification have to do with microservices exclusively, this conversation would be incomplete without a brief overview.

The left front bottom corner of the cube represents monolithic architecture, while the one in the right back top corner represents infinite scaling potential.

X-Axis Scaling

X-axis scaling boils down to duplicating or cloning; it’s similar to horizontal scaling. In this case, software engineers replicate servers and use load balancers to distribute traffic.
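As a rough illustration of how traffic is spread across identical clones, here is a minimal round-robin sketch. The class name and replica labels are hypothetical, and real load balancers typically add health checks, weighting, and connection tracking that are left out here.

```python
from itertools import cycle


class RoundRobinBalancer:
    """Hands out identical replicas in a fixed rotating order."""

    def __init__(self, replicas):
        replicas = list(replicas)
        if not replicas:
            raise ValueError("at least one replica is required")
        self._rotation = cycle(replicas)

    def pick(self):
        # Every call returns the next replica, wrapping around at the end.
        return next(self._rotation)


balancer = RoundRobinBalancer(["replica-a", "replica-b", "replica-c"])
assignments = [balancer.pick() for _ in range(5)]
```

Because every clone is interchangeable, adding capacity along the X-axis only means adding another entry to the replica list.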

Y-Axis Scaling

Y-axis scaling is about “splitting dissimilar things,” or “functional decomposition” [payment, login, or order management]. Related functions are grouped into separate services, forming macroservices or microservices. Under this logic, each service has its own server or VM and an individual database for speed and resilience.

Z-Axis Scaling

Z-axis scaling suggests “splitting similar things” so that every split component is just like the others. The best example of scaling along the Z-axis is splitting along geographical borders (e.g. having one set of software systems for Germany and a similar one for the U.K.). This approach goes beyond geography: similar things can also be split along arbitrary lines, such as dividing a catalog into parts by SKU or a user database by user_id.
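Splitting a user database by user_id can be sketched as a hash-based shard lookup. The function name and the shard count are hypothetical. A stable hash is used here because Python's built-in `hash()` for strings varies between processes, which would send the same user to different shards after a restart.

```python
import hashlib


def shard_for(user_id, shard_count):
    """Map a user_id to one of shard_count identical shards."""
    if shard_count < 1:
        raise ValueError("shard_count must be at least 1")
    # SHA-256 gives the same digest in every process and on every machine.
    digest = hashlib.sha256(str(user_id).encode("utf-8")).digest()
    # Use the first 8 bytes as an integer and fold it into the shard range.
    return int.from_bytes(digest[:8], "big") % shard_count
```

Note that with plain modulo sharding, changing the shard count remaps most users, which is one reason production systems often reach for consistent hashing or a lookup table instead.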

Now that we’ve crossed all t’s theory-wise, let’s get down to practice and review a few of the microservices scaling best practices.

Best Practices: How to Scale Microservices

These are some of the techniques and concepts to enable a smooth process.

Microservices-First Design

This approach is not for everyone and many of the experts in the field see a well-designed monolith as the default architecture.

But if there is sufficient funding, an experienced software engineering team, and a clear understanding of the ultimate shift to the MS design, a microservices-first approach may be the right choice.

Even though you will spend more time and resources upfront designing the services, you save yourself the drama of splitting the system up once it’s in production. Needless to say, such a design is inherently more scaling-friendly too.

Tracking the Right Metrics with the Right Tools

Scaling done right involves 360-degree observability and monitoring of all infrastructure components to detect early signs of emerging bottlenecks and system failures. Logging, monitoring, and tracing are more complex in this setup compared to a monolithic architecture.

Knowing what exactly needs monitoring and tracing and which tools to use helps instill threat detection procedures and ensure a transparent deployment environment.

Whether you log from a central service or individual services, tools like Splunk, Logstash, AWS CloudWatch, or ELK provide a comprehensive toolkit for the mission.

Prometheus, cAdvisor, AppDynamics, and Sensu are often chosen for service and API monitoring.

Dynatrace, Jaeger, New Relic, and Zipkin help DevOps engineers with microservices tracing.

Asynchronous Messaging as a Fundamental Scaling Prerequisite

Synchronous communication, such as calls to a REST API, is not the best solution for software systems that need to scale exponentially [especially in an unpredictable manner where the workload may spike and drop dramatically over a short period of time].

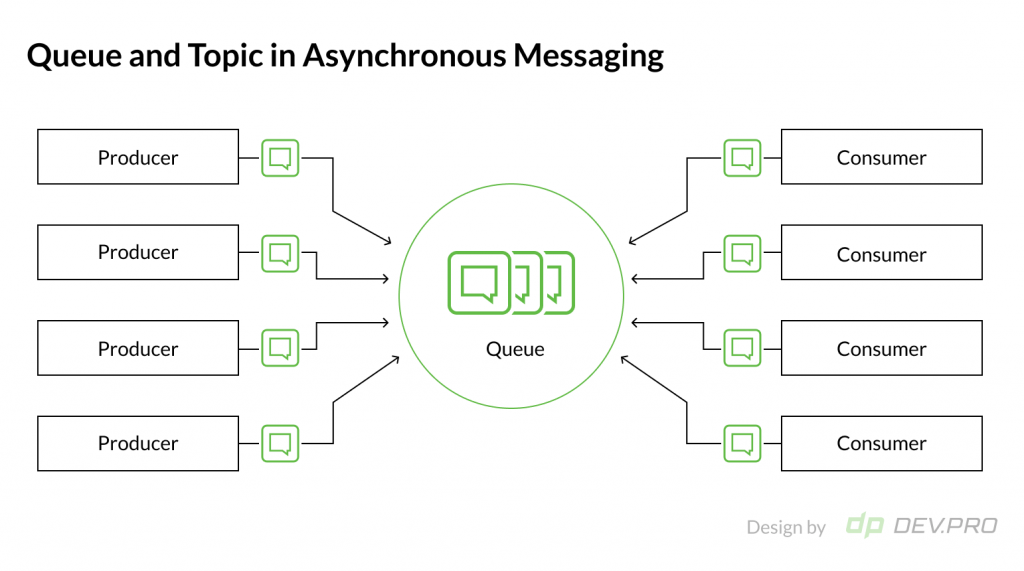

This is why asynchronous communication is often used for solutions that have fluctuating or seasonal workloads and need to be highly resilient. Under this setup, the messages wait in the queue until the receiving service is available to process them, while the sending service does not need to wait for a response. The queue operates like a buffer.
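The buffering behavior described above can be imitated in a single process with Python's standard `queue` module. This is only a stand-in for a real broker: the message names and the `run_demo` helper are hypothetical, and nothing here reflects the actual Kafka or RabbitMQ client APIs.

```python
import queue
import threading


def run_demo(message_count=5):
    """Enqueue messages before any consumer exists, then drain them."""
    buffer = queue.Queue()
    processed = []

    def consumer():
        while True:
            message = buffer.get()
            if message is None:  # sentinel: no more work
                break
            processed.append(message.upper())

    # The sender returns immediately after each put; it never waits
    # for the receiving side to process anything.
    for i in range(message_count):
        buffer.put(f"order-{i}")
    waiting = buffer.qsize()  # messages parked while the receiver is down

    # The receiving service comes online later and works through the backlog.
    worker = threading.Thread(target=consumer)
    worker.start()
    buffer.put(None)
    worker.join()
    return waiting, processed
```

The sender finishes its work while the receiver is still unavailable, and the backlog is processed in order once the receiver starts.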

Apache Kafka and RabbitMQ are the most common choices to enable asynchronous messaging between microservices.

CI / CD Processes and Automation Help Reach Exponential Scaling

Microservices run best in an environment where deployment and delivery are continuous, releases are frequent, and major testing processes are automated. Continuous integration, delivery, and deployment are even more critical for a solution where services need to be scaled gradually or suddenly.

How to Scale Microservices, Not Your Bills

Scaling Microservices with Kubernetes

Kubernetes allows software architects to scale individual services horizontally or elastically, while an entire cluster of microservices can also expand vertically.

Elastic scaling ensures that the allocated capacity closely matches the workload. Tools like Terraform help enable autoscaling patterns when combined with Kubernetes capabilities. While the service can be deployed on-prem, major cloud providers offer managed Kubernetes services for cloud deployment, such as Amazon EKS, Google GKE, and Azure AKS.
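To make "capacity closely matches the workload" concrete, here is a sketch of the replica calculation documented for the Kubernetes Horizontal Pod Autoscaler, which the article does not name explicitly. It is simplified: the real autoscaler also applies a tolerance band and stabilization windows, and the default bounds below are hypothetical.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10):
    """Scale the replica count in proportion to metric / target."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    # Core rule: ceil(currentReplicas * currentMetric / targetMetric).
    raw = math.ceil(current_replicas * current_metric / target_metric)
    # Clamp to the configured bounds so a spike cannot scale without limit.
    return max(min_replicas, min(max_replicas, raw))
```

For instance, four replicas averaging 90% CPU against a 60% target would be scaled to six, while the upper bound acts as a cost ceiling during extreme spikes.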

Allocating Individual Storage and Databases For Each Microservice

There are different views on this issue, and having a shared database for several microservices is preferable in some cases. Yet, such an infra setup has many of the same disadvantages as a monolithic architecture.

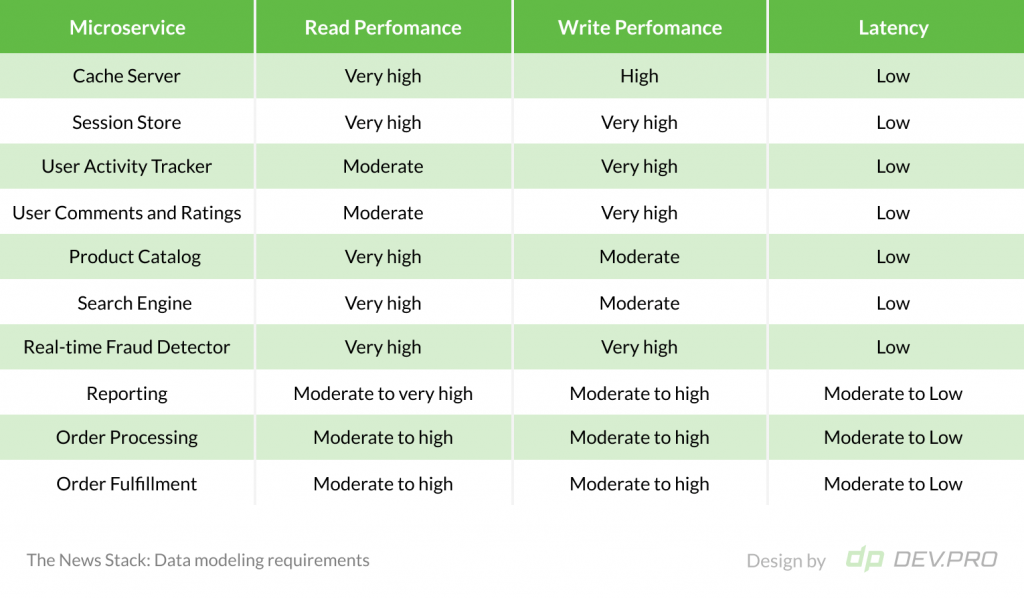

Separating storage capacities [databases and file storage services] allows one to choose a storage vendor with the right subscription plan for each specific service and to optimize the cost accordingly. Some microservices may require moderate read and write performance, while others need to perform over a million operations per second. Some can tolerate higher latency for storage, while others need low latency for real-time reporting.

Choose the Right Caching Mechanism

Another way to reduce cloud costs for your project is to decrease the number of requests between the Catalog API and the services by improving your caching process. This requires careful selection between remote, near, and proxy cache mechanisms according to your project and the parameters of each microservice.
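As one illustration, a near cache keeps recently fetched values in the calling service's own memory so that repeated lookups skip the network. The sketch below is a minimal version with a time-to-live. The class name, the `loader` callback, and the `remote_calls` counter are hypothetical names introduced for the example.

```python
import time


class NearCache:
    """In-process cache that re-fetches a key once its TTL has expired."""

    def __init__(self, loader, ttl_seconds, clock=time.monotonic):
        self._loader = loader          # function that performs the remote call
        self._ttl = ttl_seconds
        self._clock = clock            # injectable so expiry can be tested
        self._entries = {}             # key -> (value, expires_at)
        self.remote_calls = 0

    def get(self, key):
        now = self._clock()
        entry = self._entries.get(key)
        if entry is not None and entry[1] > now:
            return entry[0]            # fresh hit: no remote request
        # Miss or expired entry: go to the remote service and store the result.
        self.remote_calls += 1
        value = self._loader(key)
        self._entries[key] = (value, now + self._ttl)
        return value
```

The TTL is the main tuning knob: a longer value saves more requests but serves staler data, so it has to be chosen per microservice.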

Use Spring Boot to Compress Response Messages

Compressing response messages from the microservices can help manage cloud capacity consumption by minimizing the size of the transported message. Spring Boot includes a setting that enables gzip compression of response messages, reducing the amount of data each cloud instance has to send.
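In Spring Boot itself, compression is switched on through configuration (the `server.compression.enabled=true` property) rather than code. To show the effect on payload size in isolation, here is a small sketch using Python's standard `gzip` module. The helper name and the sample payload are hypothetical, and actual savings depend heavily on how repetitive the response body is.

```python
import gzip
import json


def compress_response(payload):
    """Serialize a payload to JSON and gzip it.

    Returns (compressed_bytes, raw_size, compressed_size).
    """
    raw = json.dumps(payload).encode("utf-8")
    packed = gzip.compress(raw)
    return packed, len(raw), len(packed)


# A repetitive, list-style response of the kind APIs often return.
sample = {"items": [{"sku": f"SKU-{i:05d}", "in_stock": True} for i in range(200)]}
packed, raw_size, packed_size = compress_response(sample)
```

Very small responses can end up larger after compression because of gzip's fixed header overhead, which is why frameworks usually apply it only above a minimum response size.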

Instilling FinOps Culture in Your Team

Cloud Cost Management is finding its footing in the software development universe, yet many of its right-sizing and right-costing techniques are used sporadically. For example, if you have a rarely used database, you might want to move it to a cheaper tier. Similarly, it’s worth checking if other availability zones offer lower rates for your low-access frequency services.

This Cloud Cost Optimization white paper details many of the useful techniques.

Takeaways

Fierce competition among cloud service providers and the proliferation of adjacent tools ensure that microservice scaling can come at a reasonable cost. Using Kubernetes, choosing the right database for your services, selecting between on-demand, reserved, and dedicated instances, and using caching to reduce cloud capacity consumption are just a few of the methods available.

On the flip side, a quick change of scenery in this fast-changing realm also keeps technical talent on their toes, especially when it comes to vendor, architecture, and scaling type selection. Your DevOps team should always be on the lookout for better and cheaper approaches, tools, and subscription plans.

Dev.Pro’s expertise is deeply rooted in system integration and has been polished in 160+ projects. If you need a second opinion on how to autoscale microservices or want to refactor from an on-prem monolith to a microservices approach, we can help. Chat with our expert sales team for a no-obligation quote for your specific case.

FAQ

With over 60% of applications using this setup, the examples are manifold. Many industry leaders with hundreds of millions of Monthly Active Users enjoy the benefits of loose service coupling.

Leading companies like Uber, Netflix, Amazon, and Etsy all successfully use microservices architecture in their software products.

When microservices are designed so that cloud cost closely follows consumed capacity, a business gets the best of both worlds: its software product can sustain high performance under a fluctuating workload, while the right technical levers keep cloud costs in check. High resilience, data compliance, and faster time to market are also known advantages.

Spring Boot allows you to develop microservices faster thanks to its auto-configuration feature and opinionated approach to configuration. It offers a set of predefined dependencies that the system selects for your project based on standard templates and user input, so little manual configuration is required.

Spring Boot also provides production-ready capabilities like reporting and health checks, which make scaling easier to administer.