Performance testing best practices are critical for the survival of scaleups whose business idea is good enough to attract a solid influx of users. When you have a market-proven idea, the worst thing you can do is let a poorly tested application bring your MAU [Monthly Active Users] and app ratings down.

These figures speak for themselves:

- For each second of loading delay, website conversion rates drop by 4.4%.

- On average, only 22% of a company’s budget is devoted to QA, according to the World Quality Report. That’s a decrease from a high of 35% in 2015. This decline may be partially due to the growing use of automated tests: 68% of QA engineers confirm they have the automation tools they need.

In addition to being a major method for ensuring your application will withstand a sudden surge in users, performance testing also helps companies catch bugs and errors early in the development cycle, when they are cheapest to fix.

Before diving into best practices for performance testing, let’s briefly outline some of its fundamental concepts, types, and metrics.

Performance Testing: Basic Concepts

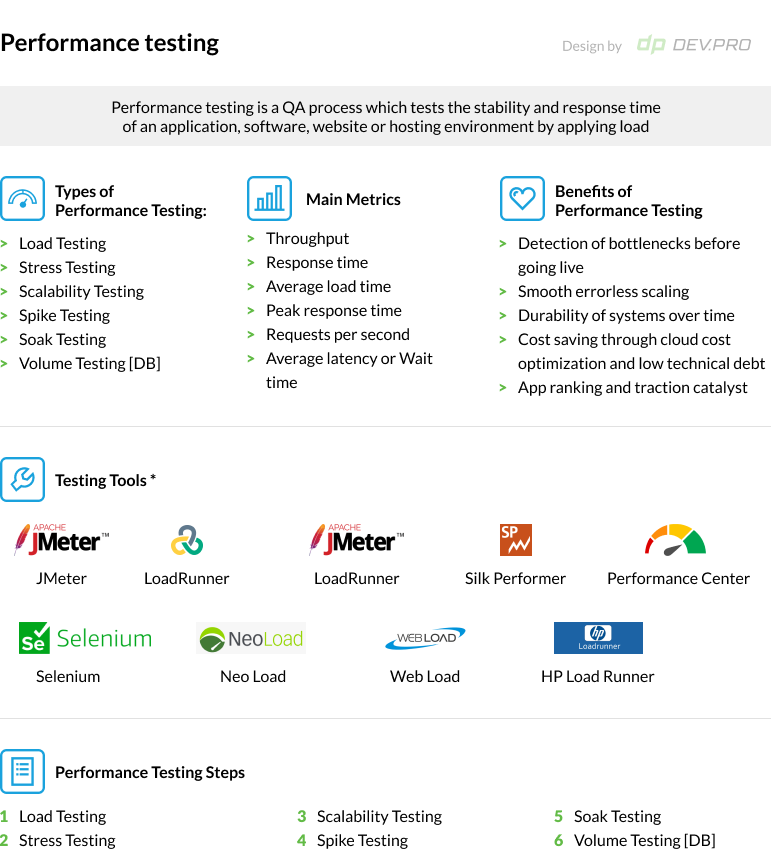

Performance testing is a QA technique designed to test the stability, reliability, scalability, and response time of an application, software, website, or hosting environment by applying load [number of users using the application at a certain time].

This process is designed to detect potential issues that a system will experience under increased user traffic without jeopardizing actual performance in production. It’s akin to a crash test, where a vehicle is put through extreme conditions to test its safety features before the model goes on sale.

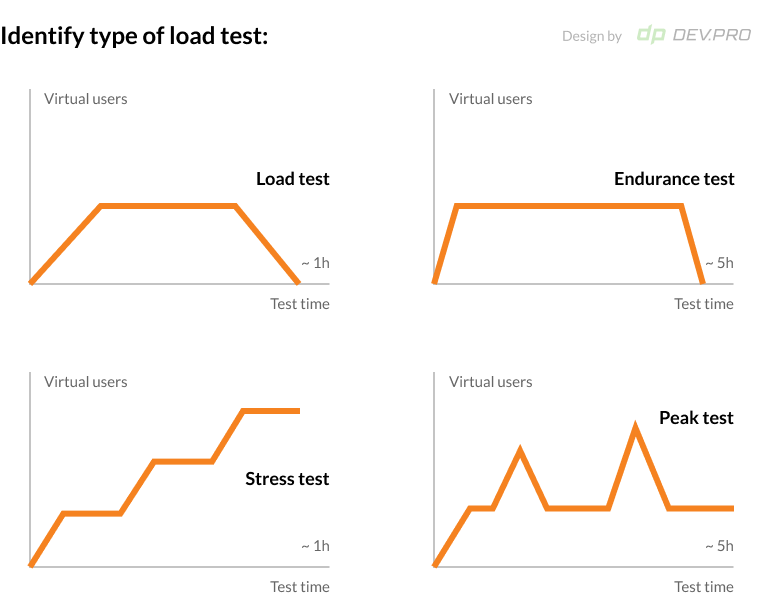

Types of Performance Testing:

- Load Testing

Checks if software can run with the intended number of users to identify potential congestion spots before going live. It also helps estimate the response time under regular load.

- Stress Testing

Checks if software can run under an extreme user load to find the system’s breaking point and its weak links.

- Scalability Testing

Checks if software can run while gradually increasing workload. This type of performance testing is used to prepare for scaling and to create a schedule for adding new capacities to systems.

- Spike Testing

Checks if software can run under extreme user load for a short period of time to identify if and how dramatic changes will impact its performance.

- Endurance Testing

Checks if software can run continuously under the designed workload for a prolonged period of time without breaking, and identifies which errors crop up at which point.

- Volume Testing

Volume testing is done by loading the database [DB] with a higher volume of data than expected to test the capacity of the hosting environment and find its breaking point.

| Test Name | Users | Time | Object Tested | Purpose |
| --- | --- | --- | --- | --- |
| Load | Less or equal to designed number of users | Whatever it takes | Software / Application | To identify bottlenecks and app configurations |
| Stress | More than designed number of users | Whatever it takes | Software / Application | To identify the breaking point |
| Scalability | More than designed number of users | Whatever it takes | Software / Application | To identify when and how to add capacity to systems |
| Volume | More than designed capacity of DB [DataBase] | | Storage [DB, disks] | To identify the breaking point of DB capacity |
| Endurance | Designed number of users | Prolonged period of time, hours | Software / Application | To identify if prolonged usage reveals any errors |
| Spike | More than designed number of users, added instantly | Short period of time, minutes | Software / Application | To identify how dramatic increase impacts performance |
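The user-driven test types above differ mainly in how the number of simulated users changes over time. The sketch below is one rough way to express that, assuming a designed capacity of 100 users. The function name `target_users` and every multiplier and duration in it are illustrative assumptions, not figures from this article. Volume testing is left out because it varies data size rather than user count.

```python
def target_users(test_type, minute, designed_users=100):
    """Return the target number of simulated users at a given minute.

    All shapes, multipliers, and durations below are illustrative assumptions.
    """
    if test_type == "load":
        # Hold at the designed number of users.
        return designed_users
    if test_type == "stress":
        # Keep adding users past the designed number to find the breaking point.
        return designed_users + 20 * minute
    if test_type == "scalability":
        # Grow the workload gradually: 25% more users every 10 minutes.
        return int(designed_users * (1 + 0.25 * (minute // 10)))
    if test_type == "spike":
        # Jump to 3x the designed load for minutes 5-6, then drop back.
        return designed_users * 3 if 5 <= minute <= 6 else designed_users
    if test_type == "endurance":
        # Same load as a load test; what changes is the long run duration.
        return designed_users
    raise ValueError(f"unknown test type: {test_type}")
```

A load generator could call a function like this once per minute to decide how many simulated users to keep active.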

Benefits of Performance Testing

As mentioned before, performance testing is critical for web and mobile applications that scale fast, because both stability and response time impact MAU growth.

Other significant advantages of running this kind of test on an ongoing basis include:

- Detection of bottlenecks before going live

- Smooth and errorless scaling

- Durability of systems over time

- Cost saving through cloud cost optimization and low technical debt

- App ranking and traction catalyst

Let’s review the major steps QA engineers take when designing and executing performance testing.

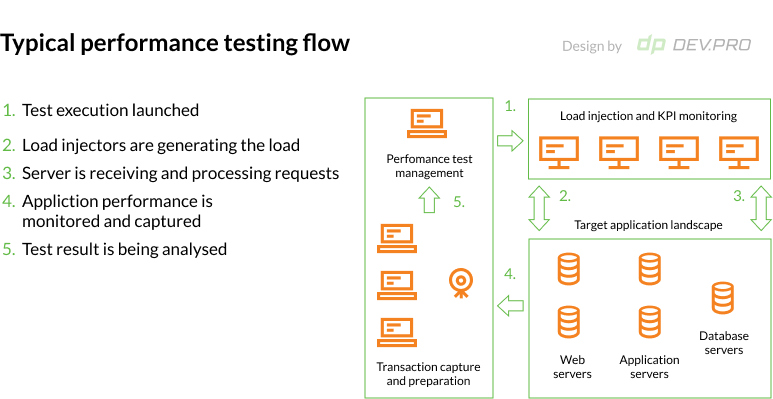

Performance Testing Steps

Depending on the type of performance test, some steps may vary, but this is the regular flow of the process:

- Gather Requirements

Gather technical information about the application or software, including:

- Application’s usage

- Technology

- Architecture

- Type of deployment and hosting infrastructure

- Database used

- Number of intended users

- Hardware and software requirements

- Select Tools

Tool selection depends on many aspects, including the protocol used to develop the software, cost of the tool, and the number of designed users.

These tools and software solutions are used to perform peak, scalability, stress, and load tests:

- Plan the Performance Test

Choose the types of performance tests needed at this stage, and decide on the hardware, test environment, engineers in charge, time frame, budgets, and goals. Define the KPIs to monitor. These are the metrics typically tracked in this type of test:

- Throughput

- Response time

- Average load time

- Peak response time

- Requests per second

- Average latency, or wait time
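As a minimal sketch of how several of these metrics could be derived from raw results, assume each sample is a `(start_time_s, response_time_s)` pair. The `summarize` helper and the sample layout are hypothetical and not tied to any particular tool.

```python
from statistics import mean

def summarize(samples):
    """Summarize (start_time_s, response_time_s) samples from a test run.

    Hypothetical helper: the tuple layout is an assumption for illustration.
    """
    if not samples:
        raise ValueError("no samples to summarize")
    starts = [start for start, _ in samples]
    durations = [duration for _, duration in samples]
    # Wall-clock span from the first request sent to the last response received.
    span = max(s + d for s, d in samples) - min(starts)
    return {
        "requests": len(samples),
        "avg_response_s": mean(durations),
        "peak_response_s": max(durations),
        # Completed requests per second over the whole run.
        "requests_per_s": len(samples) / span if span > 0 else float("inf"),
    }

# Made-up samples: four requests sent half a second apart.
samples = [(0.0, 0.2), (0.5, 0.4), (1.0, 0.3), (1.5, 0.5)]
report = summarize(samples)
```

Most load testing tools report these figures themselves; computing them by hand is mainly useful for checking that the team agrees on what each KPI means.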

- Develop the Test

Create use cases for selected scenarios, get them approved by decision makers, develop and validate the script, and prepare the test environment.

- Model the Performance Test

Build out the load model to execute the test. Validate if the target metrics are feasible.

- Conduct the Test

Execute the test by gradually increasing the load to the designed maximum.

- Analyze the Outcome

Analyze all possible results, accounting for factors such as the reason for any failure and how this test differs from previous ones.

- Report the Results

Provide a comprehensive documented outline of the performance test to the respective stakeholders.

Application Performance Testing Best Practices

Poor application ratings are often related to wait times or bugs, which are avoidable with proper routine performance testing. Here are some recommendations to address potential issues proactively:

Select the Right Tools for Your Case

With a number of tools available to do the job, choosing the right one for your case may take some time. This is particularly true if you are choosing paid load-generating software rather than an open-source tool.

For example, distributed load testing on Amazon’s Fargate is only capable of running one test at a time, JMeter doesn’t execute JavaScript embedded in web pages the way a browser does, and LoadRunner may not be the best option for dump analysis.

Only Perform a Performance Test When You Have Enough Data

It’s best practice to start performance testing as early in the process as possible to minimize the cost of error correction. A well-rounded understanding of the business case, expected workload, and non-functional requirements is a must-have for high-quality output. Get a solid understanding of these technical particulars before plunging into the testing process.

Take Measurements Between Ramp-Up and Ramp-Down Time

In a real-life situation, users don’t typically swarm an application all at once. This is why response time and stability are best measured in the steady-state period after the ramp-up ends and before the ramp-down begins.
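One simple way to apply this is to discard samples recorded during ramp-up and ramp-down before computing any metrics. The sketch below assumes samples are `(start_time_s, response_time_s)` pairs and that the ramp boundaries are known from the test plan. The function name and the timings are made up for illustration.

```python
def steady_state(samples, ramp_up_end_s, ramp_down_start_s):
    """Keep only samples that started inside the steady-state window.

    `samples` is assumed to be a list of (start_time_s, response_time_s) pairs.
    """
    return [
        (start, duration)
        for start, duration in samples
        if ramp_up_end_s <= start < ramp_down_start_s
    ]

# Hypothetical run: 60 s ramp-up, steady load until 240 s, then ramp-down.
samples = [(10, 0.9), (70, 0.3), (150, 0.4), (230, 0.35), (250, 1.2)]
measured = steady_state(samples, ramp_up_end_s=60, ramp_down_start_s=240)
```

Here the samples at 10 s and 250 s are dropped, so the slow responses seen while load was still changing do not distort the reported figures.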

Include Think Time in Your Test Script For Correct Output

When designing a script, include a few periods that mimic the user’s “simply browsing” behavior. When first seeing an application, users tend to click around a bit to check out return conditions, categories available, and other bits of information. They don’t just log in, select products, pay, and check out.
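A minimal sketch of think time in a scripted user journey, assuming pauses drawn uniformly between a minimum and a maximum. The 1 to 5 second range and the helper names `think` and `checkout_journey` are assumptions. Real tools usually have their own timer or wait-time settings for this.

```python
import random
import time

def think(min_s=1.0, max_s=5.0, rng=random, sleep=time.sleep):
    """Pause for a random 'browsing' interval and return its length in seconds."""
    pause = rng.uniform(min_s, max_s)
    sleep(pause)
    return pause

def checkout_journey(actions, **think_kwargs):
    """Run each user action, pausing between actions the way a browsing user would."""
    pauses = []
    for index, action in enumerate(actions):
        action()
        if index < len(actions) - 1:  # no pause needed after the final step
            pauses.append(think(**think_kwargs))
    return pauses
```

Passing `sleep` as a parameter lets the script be dry-run without real delays, for example `checkout_journey(steps, sleep=lambda s: None)`.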

Software Performance Testing Best Practices

Below are our tips on how to best test your software solutions to drive uninterrupted performance under any load.

Test Individual Modules And The Infrastructure in Its Entirety

There are many parts in the ecosystem servicing a piece of software [like DB, servers], and testing them individually as well as together is an optimal method to reveal any potential bottlenecks.

Check Your Test Before Plugging In the Code You Want to Measure

One of the software performance testing best practices we’re sharing comes from Continuous Delivery expert Dave Farley.

He recommends first writing the test and running it against a stub, a minimal version of the code that does just enough for the test to run. You need to check that the test itself can actually measure at the rate you intend to assert. Only after verifying its validity should you run your real code in this test.
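A rough illustration of that idea follows, assuming the goal is to assert a throughput of 1,000 calls per second. That target, the 0.2-second measurement window, and the names `measure_rate` and `stub` are all assumptions made for this sketch rather than part of Farley’s advice.

```python
import time

def measure_rate(operation, duration_s=0.2):
    """Call `operation` in a tight loop and return the calls per second achieved."""
    calls = 0
    started = time.perf_counter()
    deadline = started + duration_s
    while time.perf_counter() < deadline:
        operation()
        calls += 1
    elapsed = time.perf_counter() - started
    return calls / elapsed

def stub():
    """Minimal stand-in for the code under test: does nothing, returns at once."""
    return None

TARGET_RATE = 1_000  # calls per second we want to assert later (assumed figure)

harness_ceiling = measure_rate(stub)
# If the harness cannot comfortably exceed the target against a no-op stub,
# it cannot be trusted to measure the real code at that rate.
harness_is_valid = harness_ceiling > TARGET_RATE
```

Once the harness clears the target against the stub, swapping the stub for the real code means any shortfall can be attributed to the code rather than to the test.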

Use Percentile For Team Goal Setting And Results Interpretation

Averages are misleading and may lull a team into thinking everything is under control when the reality is in fact alarming. Using the 50th and 90th percentiles [p50 and p90] allows for more realistic test interpretations. For bigger data sets, p99 gives the clearest picture of the slowest requests.

Bill Gates walks into a bar. Suddenly, everybody in the room is worth 70 million on average.
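The same effect shows up in response times. The sketch below uses a made-up data set of 95 fast requests and 5 very slow ones, with percentiles computed by Python’s standard `statistics.quantiles`. The numbers are purely illustrative.

```python
from statistics import mean, quantiles

# Hypothetical response times in milliseconds: 95 fast requests, 5 very slow ones.
response_times_ms = [100] * 95 + [5_000] * 5

average = mean(response_times_ms)
# quantiles(..., n=100) returns the 99 cut points p1..p99.
cut_points = quantiles(response_times_ms, n=100, method="inclusive")
p50, p90, p99 = cut_points[49], cut_points[89], cut_points[98]

print(f"average={average} ms, p50={p50} ms, p90={p90} ms, p99={p99} ms")
```

The average comes out at 345 ms, a value no request actually experienced, while p50 and p90 show the typical 100 ms and p99 exposes the 5-second tail.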

Choose Lab Tests for Big Events or Major Changes in Architecture

When facing a big change in your software architecture or an upcoming event that prompts a huge influx of users [e.g., Prime Day for eCom], opt for a lab test over a CI/CD pipeline. It’s on these major occasions that lab-controlled testing is both impactful and worthwhile to undertake.

Cloud Performance Testing Best Practices

Cloud environments on their own are sophisticated technical ecosystems that require an individual focus and audit system. This is especially true for complex hybrid and multi-cloud environments, particularly when updates are rolled out to one part of the system.

Encrypt Data for Enhanced Security

The use of encryption between load generators and controllers can be further reinforced by OTP [One-Time Password] checks to ensure no leakage occurs during performance testing in the cloud.

Go Multi-Cloud

When using different cloud service providers for the test, not only do you get a chance to test from a wider range of geographical zones, but you also test the compatibility and stability of the cloud service providers’ environments.

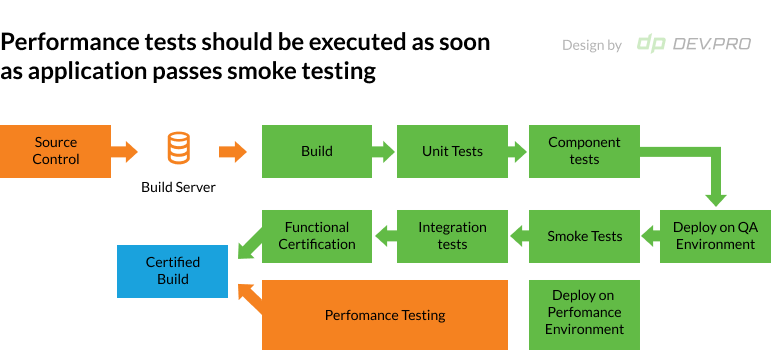

DevOps Performance Testing Best Practices

When working on your DevOps processes, performance testing can and should be integrated into your Continuous Development and Deployment routines.

Mind Your SLAs and SLOs

Service Level Agreements [SLA] are there to protect both sides: a client and a DevOps services company. If you happen to outsource QA services and a software development company does your performance testing, SLAs will protect both of you.

Gopal Brugalette of SoFi, an online financial services company, once had a case where he needed to bring up an SLA to persuade a product owner not to launch a product due to a half-a-second delay in response time, which went against the SLA terms. The cost of this delay would have been several million dollars.

Incorporate Performance Thinking Into Agile Process

Agile teams can also incorporate performance engineering elements into their workflow, even though performance testing can seem to stand in opposition to the principles underlying Agile. For example, every story can be flagged if it carries a performance impact. All the team has to do is tick a box in the story, which later triggers a collaborative effort with supporting performance engineers and follow-up actions.

Keep an Eye on Counts Dynamics in CI/CD

It’s not all about response time. Changes in count metrics can also reveal underlying issues in software performance. Monitoring how many calls are made to the database or third-party services per period, and how that number changes over time, can help diagnose bottlenecks in the architecture.
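A sketch of such a check in a CI/CD pipeline, assuming each build records how many times each query or external call was made during the same scenario. The call names, the 20% tolerance, and the `count_regressions` helper are hypothetical.

```python
def count_regressions(baseline, current, tolerance=0.2):
    """Return calls whose count per run grew by more than `tolerance` (20% by default).

    Both arguments map a call name (e.g. a query or an external endpoint) to how
    many times it was invoked during the same test scenario.
    """
    flagged = {}
    for name, now in current.items():
        before = baseline.get(name, 0)
        if before == 0:
            if now > 0:
                flagged[name] = (before, now)  # call did not exist in the baseline
        elif (now - before) / before > tolerance:
            flagged[name] = (before, now)
    return flagged

# Hypothetical counts collected from two CI builds running the same scenario.
baseline = {"db.select_orders": 120, "payments_api.charge": 10}
current = {"db.select_orders": 480, "payments_api.charge": 10, "db.select_user": 40}
regressions = count_regressions(baseline, current)
```

In this made-up example the order query is flagged for quadrupling and the user query for being new, even if response times still look acceptable under test load.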

Use A/B Tests, Blue-Green, and Canary Deployments to Test in Production

All of these continuous deployment methods will help integrate performance testing into the production cycle, allowing for easy rollbacks if things go wrong.

To Run or Not to Run the Test? The Risks Can Prompt The Answer

Performance Tests are a critical part of the performance engineering cycle for industries like eCommerce and HealthTech, where a lag of half a minute can have prohibitive costs.

If your software product, application, or website belongs to an industry where endurance, stability, and latency can make or break your customer satisfaction, traction, MAU, and investment prospects, consider hiring a performance engineering consultancy. An audit can help you identify ways of building performance tests into your deployment cycle, budgets, and outcomes.

With the growth of cloud technology, cloud-based performance testing can be a method of Cloud Cost Optimization, particularly for large data sets.

Dev.Pro’s performance engineers can help ensure your software is stable under its intended workload and offers user-friendly response times in a fully remote mode. Need an audit of your performance engineering needs? Tell us about your use case using this form. We’ll reply within one business day.